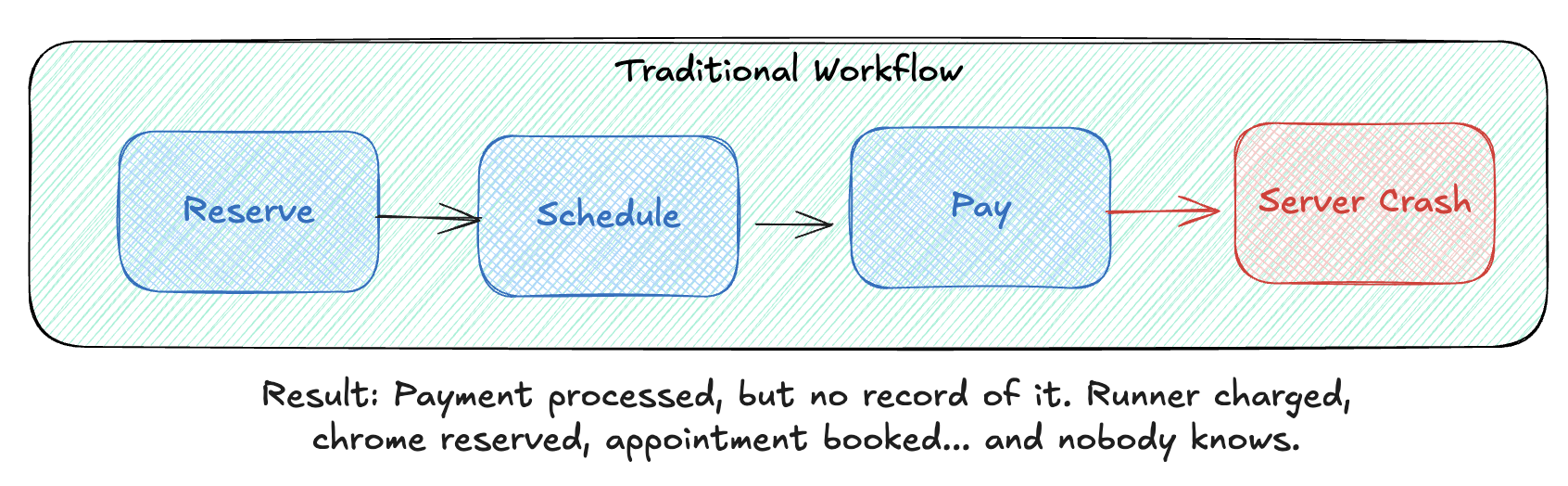

After spending time with enterprise integration patterns, one thing keeps coming up: distributed transactions are hard. When your process spans multiple services, each with its own database, you can’t just wrap everything in a BEGIN/COMMIT. What happens when step 3 of 5 fails? The first two services already committed their changes. You’re stuck in an inconsistent state.

The Saga pattern addresses this with compensating transactions: every step has an “undo” action. If something fails, you run compensations in reverse order to restore consistency. Elegant in theory, but implementing it reliably gets tricky fast. As we saw with webhook delivery, even retrying an API request has hidden complexity. This is where durable execution becomes so critical. I’ve been exploring Temporal, and I really like how it handles these kinds of problems while letting you focus on your core domain.

Temporal provides durable execution: your code runs to completion even through server crashes, network failures, and deployments. You write what looks like normal functions, and Temporal handles persistence, retries, timeouts, and recovery. Here’s the webhook delivery system we built previously, reimplemented in Temporal:

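```typescript
import { proxyActivities } from '@temporalio/workflow';
// Assumes the activity implementations (including sendHttpRequest) are exported from ./activities
import type * as activities from './activities';

// Configure retry policy once
const { sendHttpRequest } = proxyActivities<typeof activities>({
  startToCloseTimeout: '30 seconds',
  retry: {
    initialInterval: '5 minutes',
    backoffCoefficient: 2,
    maximumAttempts: 5,
  },
});

// Your code is just this (WebhookEvent is defined elsewhere in the project)
export async function deliverWebhook(event: WebhookEvent): Promise<void> {
  await sendHttpRequest(event.url, event.payload);
}
```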

That’s it. No retry loops, no message queues, no dead letter handling, no database to track attempt counts. Temporal retries failed activities automatically with exponential backoff. If your server crashes mid-retry, execution resumes exactly where it left off. If you deploy new code, running executions keep going, provided your workflow changes stay compatible with in-flight executions (which is what Temporal’s workflow versioning is for). This is durable execution: code that survives infrastructure failures.
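To make that policy concrete, here’s a small dependency-free sketch (plain TypeScript, not the Temporal SDK) that lists the waits between attempts. The helper name retryDelaysSeconds is hypothetical. It assumes the standard exponential formula, where each wait is the previous one times backoffCoefficient. It also assumes Temporal’s documented default cap of 100 × initialInterval when no maximumInterval is set.

```typescript
// Hypothetical helper (not part of the Temporal SDK): the waits between
// attempts for an exponential retry policy, in seconds.
interface RetryPolicySketch {
  initialIntervalSec: number;
  backoffCoefficient: number;
  maximumAttempts: number;
  maximumIntervalSec?: number; // assumed default: 100 × initialInterval
}

function retryDelaysSeconds(policy: RetryPolicySketch): number[] {
  const cap = policy.maximumIntervalSec ?? policy.initialIntervalSec * 100;
  const waits: number[] = [];
  let next = policy.initialIntervalSec;
  // N attempts means N - 1 waits: one before each retry.
  for (let retry = 1; retry < policy.maximumAttempts; retry++) {
    waits.push(Math.min(next, cap));
    next *= policy.backoffCoefficient;
  }
  return waits;
}

// The webhook policy above: 5 minutes, coefficient 2, 5 attempts.
const delays = retryDelaysSeconds({
  initialIntervalSec: 5 * 60,
  backoffCoefficient: 2,
  maximumAttempts: 5,
});
console.log(delays.map((s) => `${s / 60}m`).join(' → ')); // 5m → 10m → 20m → 40m
console.log(`total wait: ${delays.reduce((a, b) => a + b, 0) / 60} minutes`); // total wait: 75 minutes
```

So a webhook whose endpoint never recovers is abandoned after about 75 minutes of waiting. The five attempts add their own running time on top of that, and each one is bounded by startToCloseTimeout.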

I’ve built a demo project to explore these patterns in a more interesting domain than “order processing”: Night City Chrome & Data Services, a cyberpunk underground economy where you can install cyberware, query data brokers, and coordinate heists. The saga handles compensating transactions across multiple services, and payments actually get recorded on a local blockchain.

Why Durable Execution?

Networks fail. Services time out. Servers crash mid-transaction. Making distributed systems reliable usually means building your own state machine, wiring up queues, implementing retry logic, handling dead letters, and ensuring idempotency, all spread across multiple services.

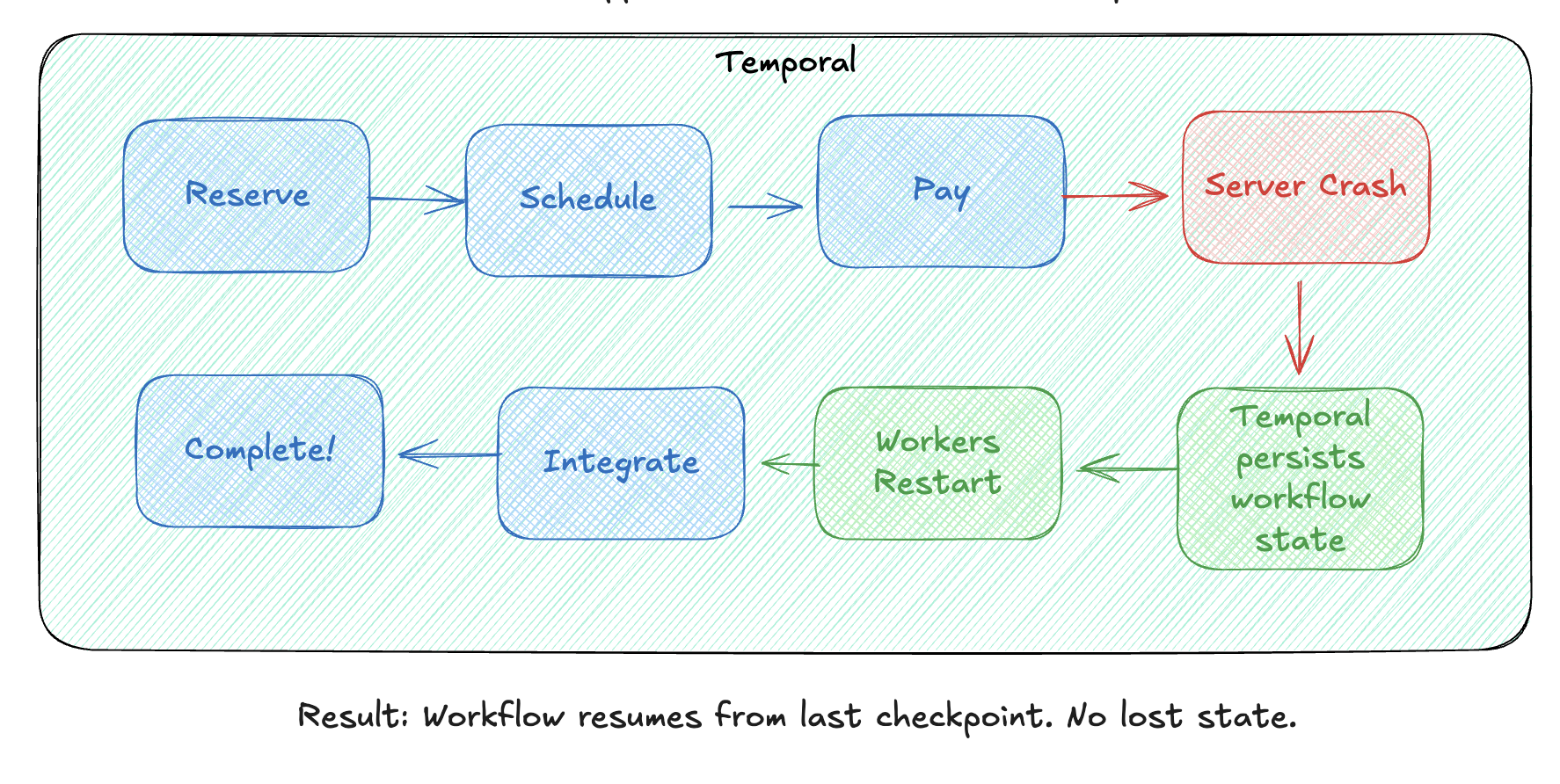

Temporal takes a different approach: you write functions, and Temporal makes them durable. Your code runs to completion regardless of infrastructure failures. Temporal persists state after each step, so if a server crashes, execution resumes from the last checkpoint on any available worker.

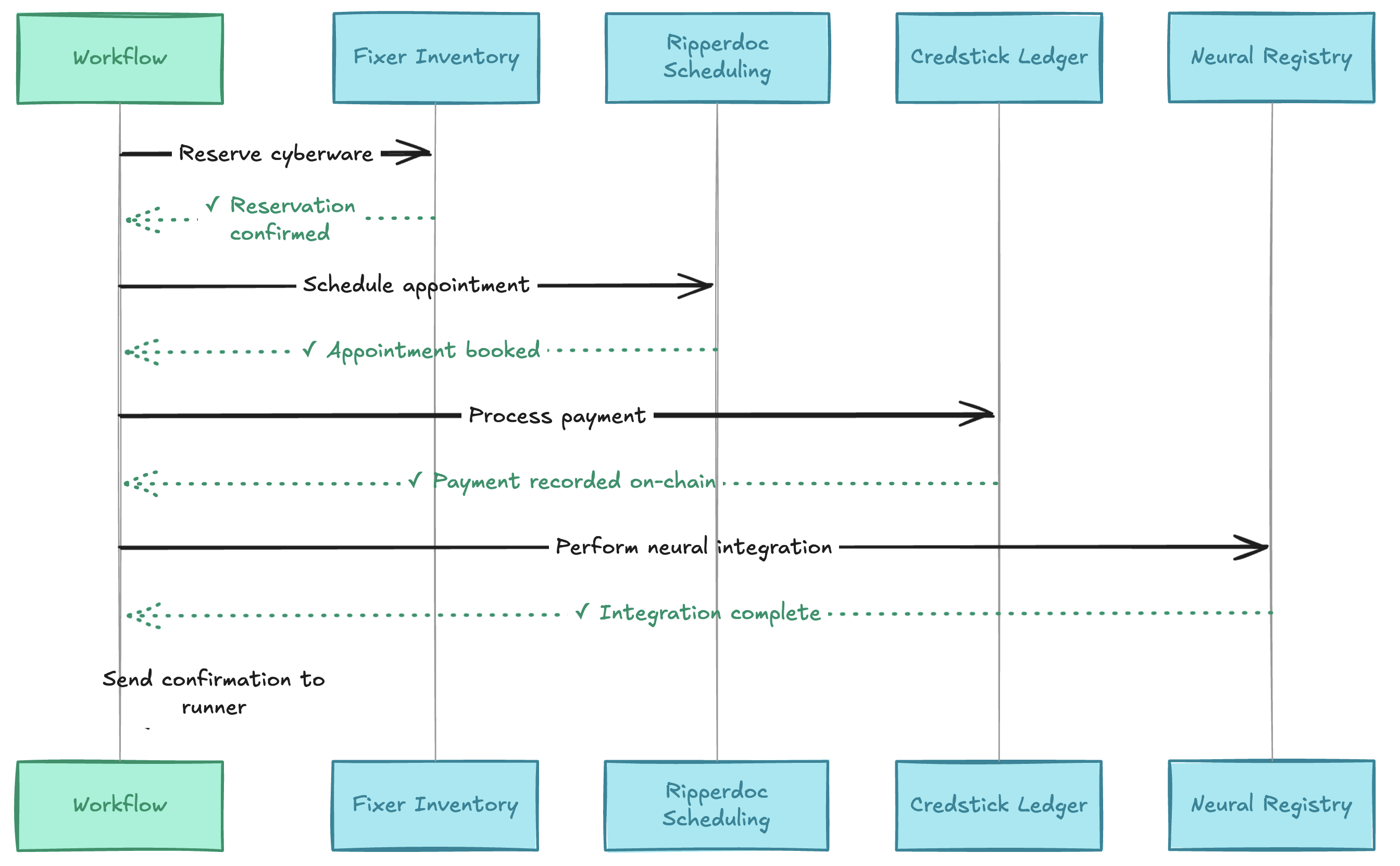
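Here’s a toy model of that checkpoint idea. It assumes a simplified replay scheme. An append-only list of completed step results stands in for the event history the Temporal Server persists. A second run (a different “worker”) replays the recorded results instead of repeating side effects. DurableContext and runStep are hypothetical names. Real Temporal replay also covers timers, signals, and determinism checks that this sketch leaves out.

```typescript
// Toy model of durable execution via replay (illustrative only).
type StepHistory = { step: string; result: string }[];

class DurableContext {
  private cursor = 0;
  constructor(private history: StepHistory, private sideEffects: string[]) {}

  // Run a step once; on replay, return its recorded result instead.
  runStep(step: string, fn: () => string): string {
    const recorded = this.history[this.cursor];
    if (recorded) {
      if (recorded.step !== step) throw new Error(`non-deterministic replay at ${step}`);
      this.cursor++;
      return recorded.result;
    }
    const result = fn();
    this.sideEffects.push(step); // the real side effect happens only here
    this.history.push({ step, result });
    this.cursor++;
    return result;
  }
}

function installation(ctx: DurableContext, crashAfterPayment: boolean): string {
  const reservation = ctx.runStep('reserve', () => 'RSV-1');
  const appointment = ctx.runStep('schedule', () => 'APT-1');
  const payment = ctx.runStep('pay', () => 'TXN-1');
  if (crashAfterPayment) throw new Error('worker crashed'); // simulated crash
  const integration = ctx.runStep('integrate', () => 'OK');
  return [reservation, appointment, payment, integration].join(',');
}

const eventHistory: StepHistory = [];
const sideEffects: string[] = [];

// Worker A runs the first three steps, then crashes.
try {
  installation(new DurableContext(eventHistory, sideEffects), true);
} catch {
  // the crash is simulated; the history survives
}

// Worker B replays from the same history and finishes the job.
const finished = installation(new DurableContext(eventHistory, sideEffects), false);
console.log(finished); // RSV-1,APT-1,TXN-1,OK
console.log(sideEffects.join(',')); // reserve,schedule,pay,integrate
```

Each side effect ran exactly once. The mismatch check in runStep also hints at why workflow code has to be deterministic: replay only works if the same code asks for the same steps in the same order.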

Consider a cyberware installation in Night City. You need to:

- Reserve the chrome from a fixer (external API)

- Schedule a ripperdoc appointment (another service)

- Process payment on the blockchain (yet another system)

- Perform neural integration (can fail catastrophically)

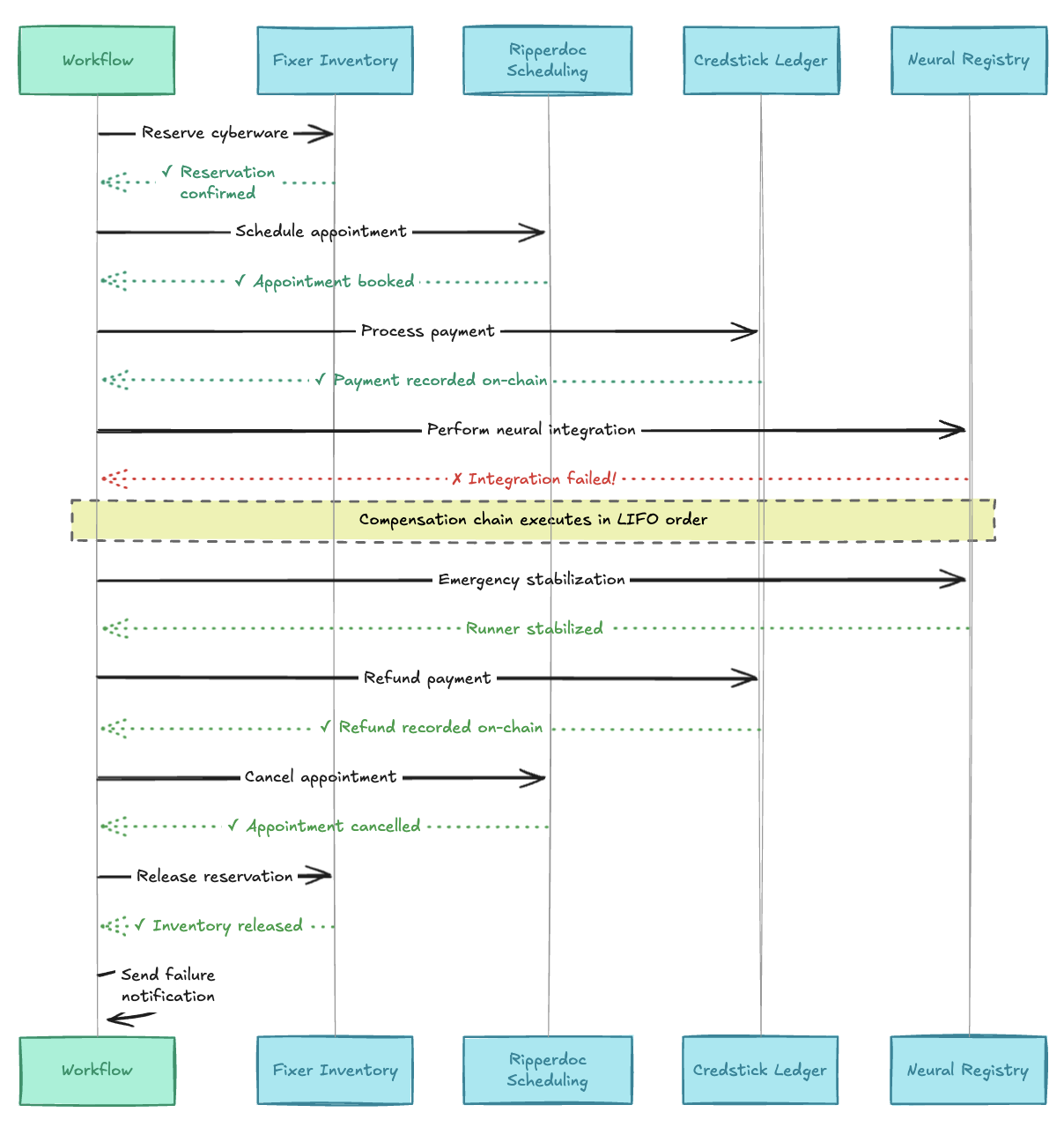

If the neural integration fails (and with experimental chrome, it often does), you need to:

- Refund the payment

- Cancel the appointment

- Release the reservation

Without durable execution, you’d track state in a database, handle partial failures, implement retry logic, and ensure idempotency for each operation. Manageable with two services, but it gets out of hand quickly as you add more.

With Temporal, you write this as a regular function with try/catch. Durable execution ensures it runs to completion, even across server restarts, deployments, or outages.

The Architecture

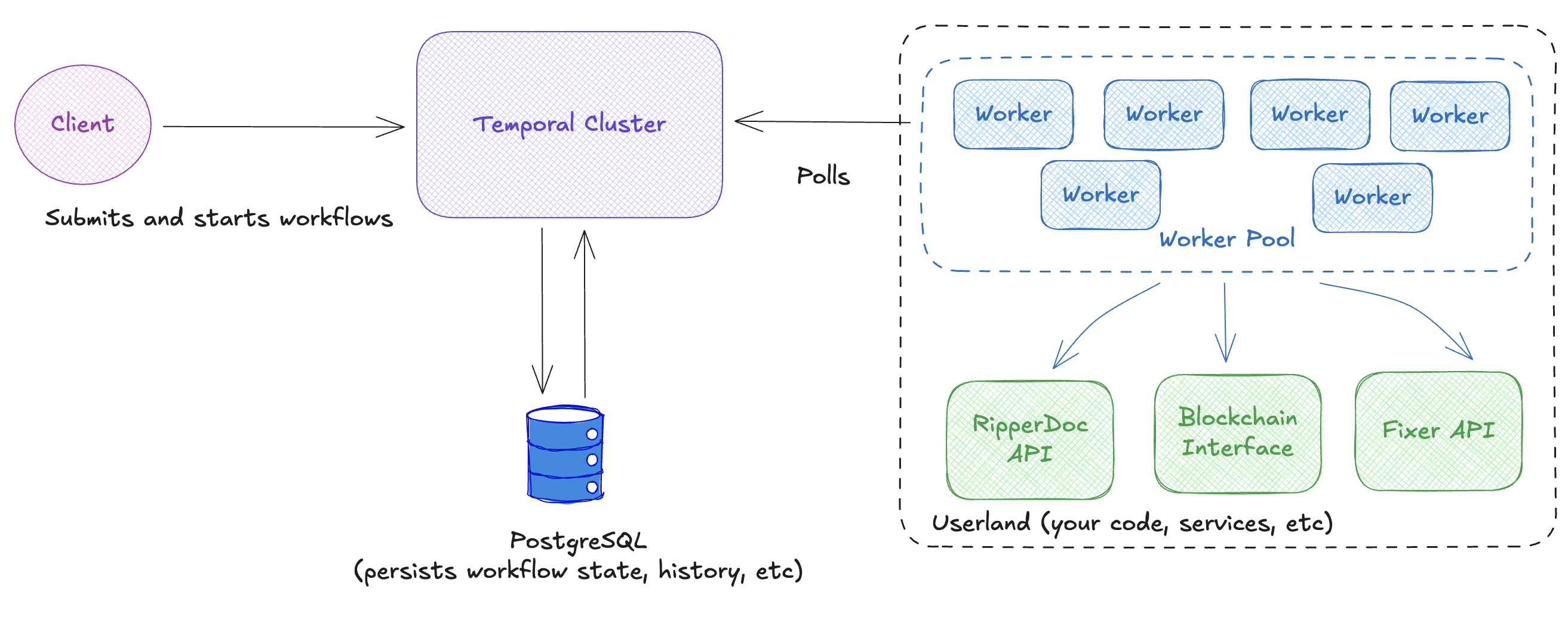

Temporal has four main components:

Your code lives in workflows (orchestration logic) and activities (the actual work: API calls, database writes). Workers are processes you run that poll the Temporal Server for tasks and execute your code. The Temporal Server persists execution state to PostgreSQL, enabling durable execution: if a worker crashes, another picks up exactly where it left off. Clients start workflows, send signals, and query state.

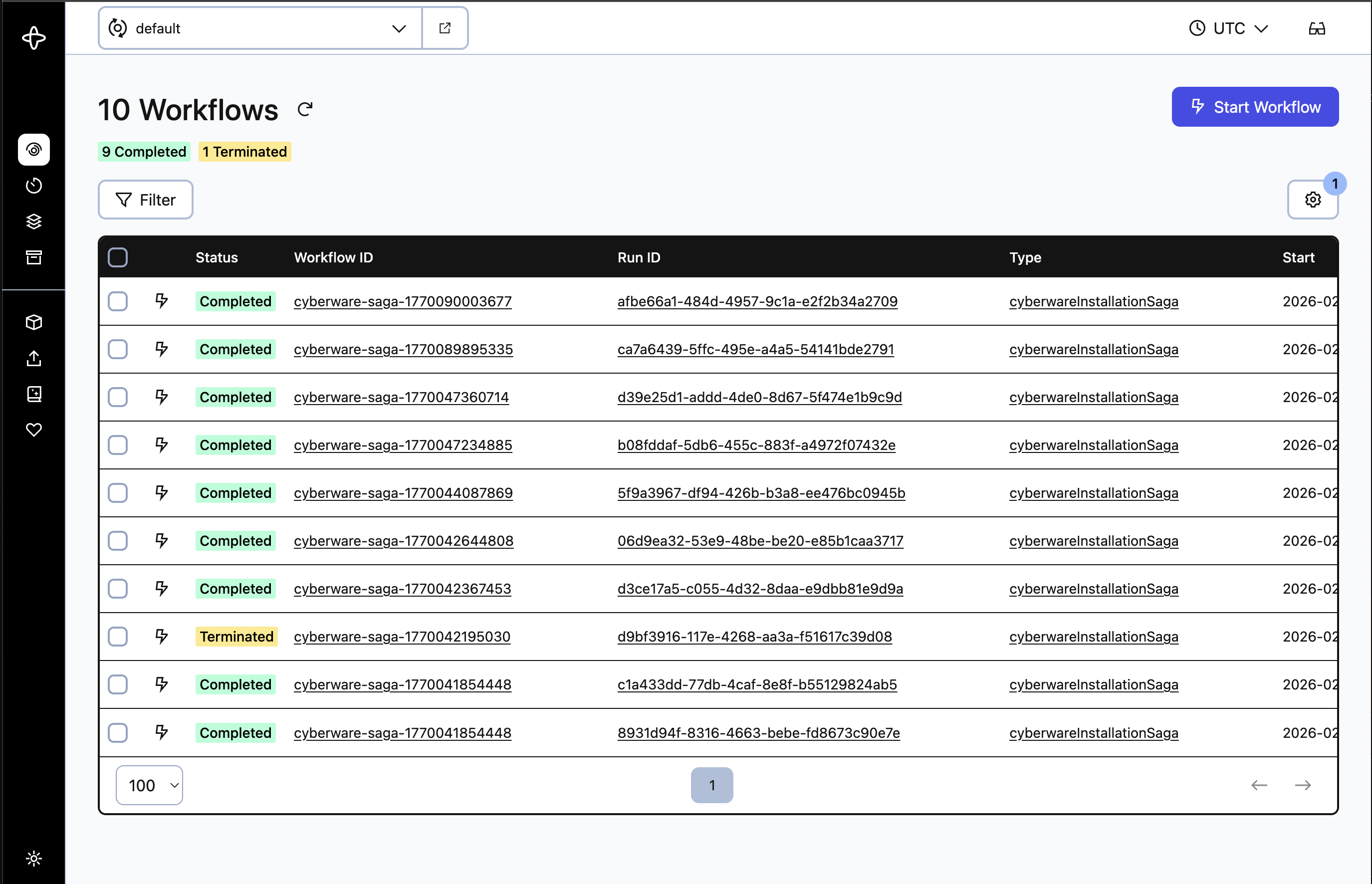

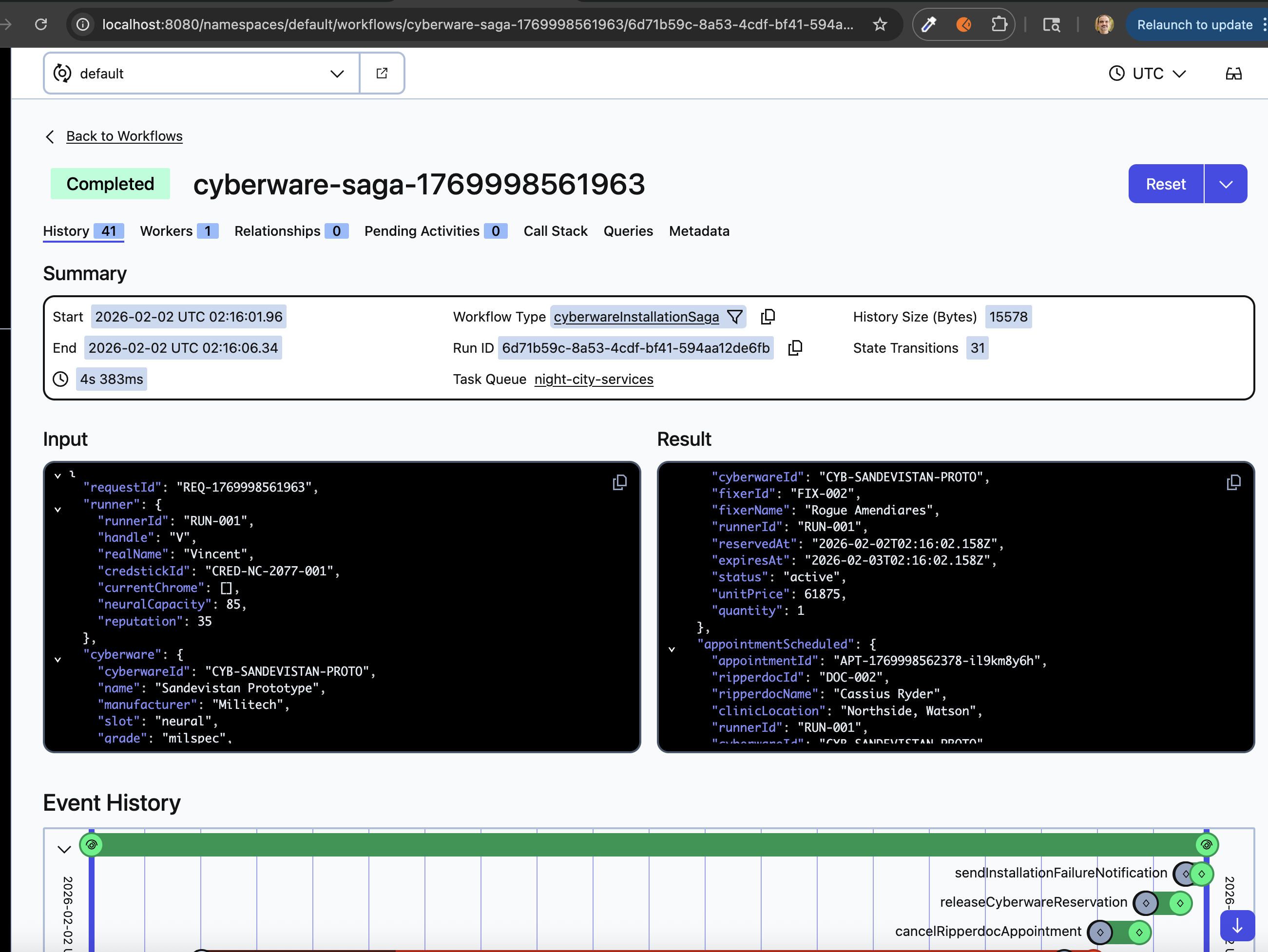

Here’s what a completed execution looks like in the Temporal UI:

Every execution gets its own page showing input, result, and full event history. The timeline at the bottom visualizes each activity. Here you can see the compensation activities (sendInstallationFailureNotification, releaseCyberwareReservation, cancelRipperdocAppointment) that ran after a failure. When something breaks in production, this is where you’ll live.

Let’s dig into how the demo app implements the saga pattern.

Pattern: Saga with Compensating Transactions

Every saga tutorial uses order processing. It’s a fine example, but I wanted something with more texture. In Night City, we’re installing cyberware: chrome that gets wired directly into a runner’s nervous system.

The Domain: Cyberware Installation

Our saga coordinates across four separate persistent systems, each with its own database.

In an ideal world, every step succeeds.

If neural integration fails, however, each compensation runs in reverse order, rolling back changes across all four systems. That’s the saga’s job.
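Stripped of Temporal, the compensation logic is just a stack. This dependency-free sketch uses hypothetical step names and a failure flag. It is synchronous for brevity, whereas in the real workflow each action and compensation is an awaited activity. Only steps whose action succeeded get pushed, so the failed step itself is never compensated.

```typescript
// Minimal saga runner sketch: each step has an action and a compensation.
interface SagaStep {
  name: string;
  action: () => void;
  compensate: () => void;
}

function runSaga(steps: SagaStep[]): { success: boolean; compensated: string[] } {
  const stack: SagaStep[] = []; // completed steps that may need undoing
  const compensated: string[] = [];
  try {
    for (const step of steps) {
      step.action();
      stack.push(step); // push only after the action succeeds
    }
    return { success: true, compensated };
  } catch {
    // Undo completed steps in reverse (LIFO) order.
    while (stack.length > 0) {
      const step = stack.pop()!;
      step.compensate();
      compensated.push(step.name);
    }
    return { success: false, compensated };
  }
}

// Usage: the fourth step (integration) fails.
const log: string[] = [];
const makeStep = (name: string, fail = false): SagaStep => ({
  name,
  action: () => {
    if (fail) throw new Error(`${name} failed`);
    log.push(`do ${name}`);
  },
  compensate: () => log.push(`undo ${name}`),
});

const sagaOutcome = runSaga([makeStep('reserve'), makeStep('schedule'), makeStep('pay'), makeStep('integrate', true)]);
console.log(sagaOutcome.compensated.join(' → ')); // pay → schedule → reserve
```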

The Code

Here’s the heart of the saga. In Temporal, you write “workflows” (the orchestration logic) that call “activities” (the actual work). Notice how it looks like normal TypeScript:

```typescript
// reserveCyberware, refundCredstickPayment, etc. are activity proxies created with proxyActivities()
export async function cyberwareInstallationSaga(
  request: CyberwareInstallationRequest
): Promise<CyberwareInstallationResult> {
  const result: CyberwareInstallationResult = {
    requestId: request.requestId,
    success: false,
    compensationsExecuted: [],
    totalCost: 0,
    totalRefunded: 0,
  };

  // Compensation stack - executed in reverse order on failure
  const compensations: Array<{ name: string; execute: () => Promise<void> }> = [];

  try {
    // Step 1: Reserve cyberware from fixer
    const reservation = await reserveCyberware(request);
    compensations.push({
      name: 'Release inventory reservation',
      execute: async () => {
        await releaseCyberwareReservation(reservation.reservationId, 'installation_failed');
      },
    });

    // Step 2: Schedule ripperdoc appointment
    const appointment = await scheduleRipperdocAppointment(request, reservation);
    compensations.push({
      name: 'Cancel ripperdoc appointment',
      execute: async () => {
        await cancelRipperdocAppointment(appointment.appointmentId, 'Installation failed');
      },
    });

    // Step 3: Process payment
    const payment = await processCredstickPayment(request, reservation, appointment);
    compensations.push({
      name: 'Refund credstick payment',
      execute: async () => {
        await refundCredstickPayment(payment.transactionId, 'Installation failed');
      },
    });

    // Step 4: Neural integration (the dangerous part!)
    const integration = await performNeuralIntegration(request, appointment);

    // Success!
    await sendInstallationConfirmation(request, integration);
    result.success = true;
    return result;
  } catch (error) {
    // In strict TypeScript `error` is `unknown`, so narrow it before reading .message
    const message = error instanceof Error ? error.message : String(error);

    // Execute compensations in REVERSE ORDER
    for (let i = compensations.length - 1; i >= 0; i--) {
      await compensations[i].execute();
      result.compensationsExecuted.push(compensations[i].name);
    }

    await sendInstallationFailureNotification(request, message, result.compensationsExecuted);
    return result;
  }
}
```

Full code: cyberware-saga.ts

The code reads like what it does. I’ve seen teams avoid distributed architectures entirely because the operational complexity felt too high. But when distributed logic looks like a regular function with try/catch, and durable execution guarantees it runs to completion, that calculus changes.

Activities: The Actual Work

Activities are where side effects happen: API calls, database writes, etc. The Fixer Inventory activity calls a real FastAPI service that intentionally returns 429 (rate limit) for the first 3 requests to demonstrate Temporal’s automatic retry.

We keep the HTTP client logic in a separate service module (fixer-api.ts) and let activities focus on orchestration:

```typescript
// services/fixer-api.ts - Simple API client
// FIXER_API_URL is defined elsewhere (the demo's fixer runs at http://localhost:8000)
export async function createReservation(request: ReservationRequest): Promise<Reservation> {
  const response = await fetch(`${FIXER_API_URL}/reservations`, {
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify({ runner_id: request.runnerId, /* ... */ }),
  });

  if (!response.ok) {
    // Just throw any Error - Temporal retries it automatically
    throw new Error(`Fixer API error: ${response.status}`);
  }

  return await response.json();
}
```

```typescript
// activities/cyberware-activities.ts - Activity uses the service
import { createReservation } from '../services/fixer-api';

export async function reserveCyberware(
  request: CyberwareInstallationRequest
): Promise<InventoryReservation> {
  // Just call the service - errors propagate naturally.
  // Temporal retries ANY thrown Error based on your retry policy.
  return await createReservation({
    runnerId: request.runner.runnerId,
    cyberwareName: request.cyberware.name,
    // ... more fields
  });
}
```

Notice what’s not here: no custom error classes, no retry logic, no state tracking. Temporal retries any thrown Error automatically based on your policy configuration (initialInterval, backoffCoefficient). Just throw, and it handles the rest.

For business errors that shouldn’t be retried, use Temporal’s ApplicationFailure:

```typescript
import { ApplicationFailure } from '@temporalio/activity';

// Inside an activity, where runner and totalCost are in scope
if (runner.credits < totalCost) {
  // nonRetryable() flags this failure so Temporal won't retry it, regardless of the retry policy
  throw ApplicationFailure.nonRetryable(
    'Runner cannot afford this chrome',
    'InsufficientFunds'
  );
}
```

So there are really only two categories:

- Retryable (default): throw any Error, and Temporal retries it per your policy.

- Non-retryable: throw ApplicationFailure.nonRetryable(), or throw an error whose type is listed in the retry policy’s nonRetryableErrorTypes.

Full code: fixer-api.ts | cyberware-activities.ts

The activity throws an error on 429, and Temporal’s retry policy handles the rest:

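```typescript
import { proxyActivities } from '@temporalio/workflow';
// Assumes the activities module exports every activity the saga calls
import type * as activities from './activities';

const { reserveCyberware, releaseCyberwareReservation /* ... */ } = proxyActivities<typeof activities>({
  startToCloseTimeout: '60 seconds',
  retry: {
    initialInterval: '10 seconds', // Match the Retry-After header
    backoffCoefficient: 1,         // No backoff - use fixed intervals
    maximumAttempts: 5,            // More than the 3 failures we expect
  },
});
```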

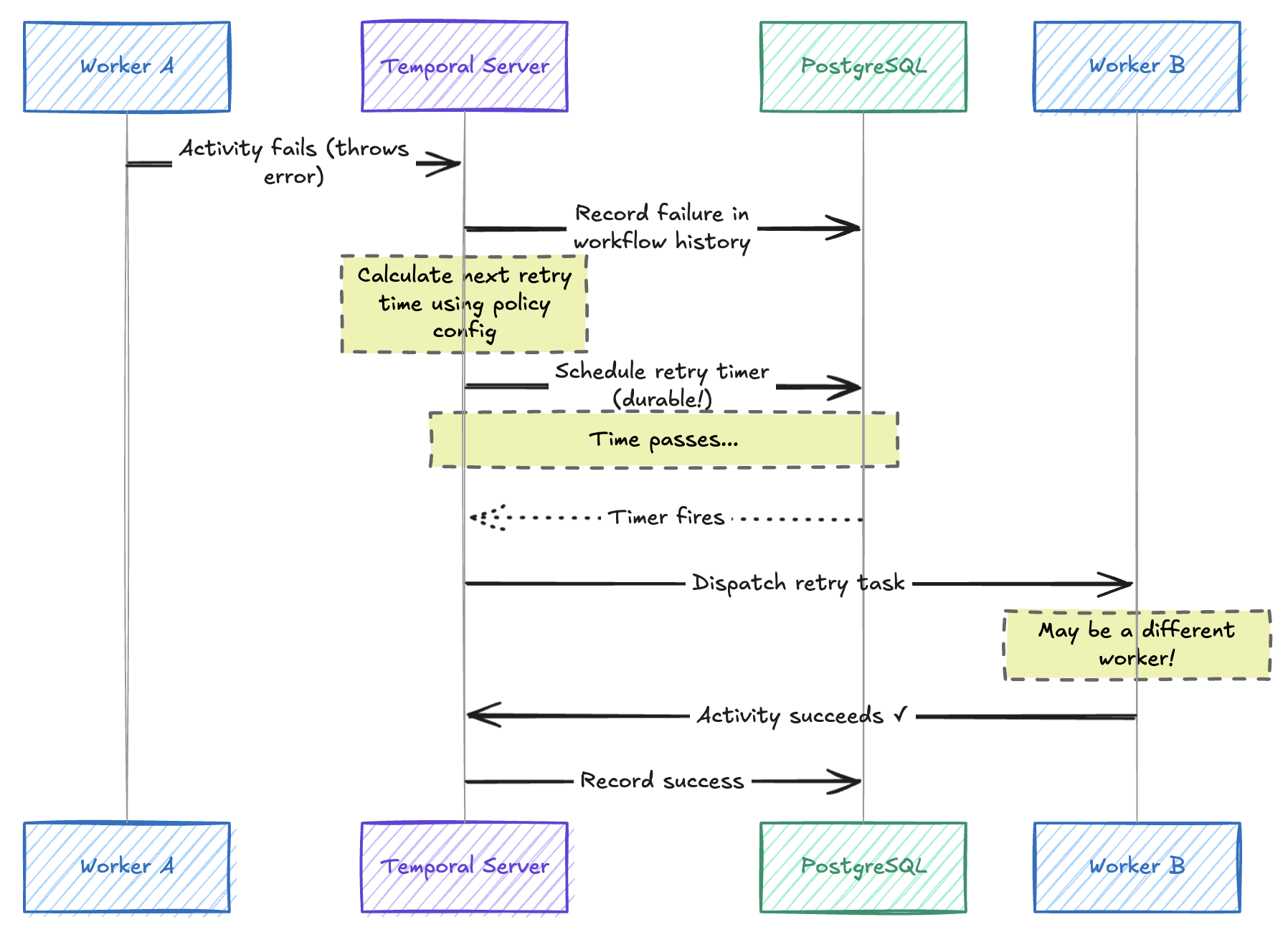
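To sanity-check this policy, you can simulate it. The sketch below is plain TypeScript rather than Temporal. makeFlakyFixer is a hypothetical stand-in for the demo’s FastAPI service, which returns 429 for the first three calls. simulate applies the same fixed 10-second interval and 5-attempt limit, tracking simulated time instead of actually sleeping.

```typescript
// Hypothetical fake fixer: rejects the first `failures` calls with 429, then succeeds.
function makeFlakyFixer(failures: number): () => number {
  let calls = 0;
  return () => (++calls <= failures ? 429 : 200);
}

// Simulate a fixed-interval retry policy without sleeping.
function simulate(
  call: () => number,
  policy: { intervalSec: number; maximumAttempts: number },
): { status: number; attempts: number; waitedSec: number } {
  let waitedSec = 0;
  for (let attempt = 1; ; attempt++) {
    const status = call();
    // Stop on success, or when this was the last allowed attempt.
    if (status < 400 || attempt >= policy.maximumAttempts) {
      return { status, attempts: attempt, waitedSec };
    }
    waitedSec += policy.intervalSec; // backoffCoefficient 1: the wait never grows
  }
}

const fixerRun = simulate(makeFlakyFixer(3), { intervalSec: 10, maximumAttempts: 5 });
console.log(`${fixerRun.status} after ${fixerRun.attempts} attempts, ${fixerRun.waitedSec}s of waiting`);
// 200 after 4 attempts, 30s of waiting
```

Three 429s cost 30 seconds of waiting and leave one attempt to spare. If the fixer kept rate limiting, the fifth failure would end the retries.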

How Durable Retries Work

When an activity throws an error, Temporal doesn’t just blindly retry. Here’s what happens under the hood:

The retry timer lives in the database, not in worker memory. If Worker A crashes, Worker B picks up the retry. This is durable execution in action, and it’s why activities should be idempotent.
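A retry can reach the fixer again even though the first request succeeded. For example, the worker might crash before reporting the result. So the service needs to recognize repeats. One common approach is to key each reservation on an idempotency key such as the saga’s requestId. The sketch below shows this with a hypothetical in-memory ReservationStore. The demo’s fixer API may handle repeats differently.

```typescript
interface Reservation {
  reservationId: string;
  cyberwareName: string;
}

// Hypothetical reservation service that deduplicates by idempotency key.
class ReservationStore {
  private byKey = new Map<string, Reservation>();
  private nextId = 1;

  reserve(idempotencyKey: string, cyberwareName: string): Reservation {
    const existing = this.byKey.get(idempotencyKey);
    if (existing) return existing; // repeat of an earlier call: no new side effect
    const reservation = { reservationId: `RSV-${this.nextId++}`, cyberwareName };
    this.byKey.set(idempotencyKey, reservation);
    return reservation;
  }

  size(): number {
    return this.byKey.size;
  }
}

const store = new ReservationStore();
const first = store.reserve('req-42', 'Sandevistan Prototype');
const retried = store.reserve('req-42', 'Sandevistan Prototype'); // e.g. retried on another worker
console.log(first.reservationId, retried.reservationId, store.size()); // RSV-1 RSV-1 1
```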

What makes this different from in-memory retries:

- Durable timers: The retry delay is stored in Temporal’s database, not in memory. If your worker crashes, the retry still happens.

- Exponential backoff: With backoffCoefficient: 2, intervals double, so a 1s initialInterval gives 1s → 2s → 4s → 8s. We use 1 for fixed intervals matching the API’s Retry-After header.

- Any worker can retry: The retry task goes to whatever worker is available. The same activity might run on different machines, which is why activities must be idempotent.

- Full history: Every attempt is recorded. In the Temporal UI, you can see each failure, the delay, and the eventual success.

- Non-retryable errors: You can mark certain errors (like InsufficientFunds) as non-retryable so Temporal fails fast instead of wasting time.
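The retry rules above boil down to a small decision. This is a simplified model for illustration, not SDK code. It assumes each failure carries a type string and an optional nonRetryable flag (the one ApplicationFailure.nonRetryable() sets). It treats maximumAttempts: 0 as unlimited. It also ignores timeouts such as scheduleToCloseTimeout, which can end retries as well.

```typescript
// Simplified model of whether a failed activity attempt is retried (illustrative only).
interface FailureInfo {
  type: string;           // e.g. 'InsufficientFunds'
  nonRetryable?: boolean; // set by ApplicationFailure.nonRetryable()
}

interface RetryPolicyModel {
  maximumAttempts: number; // 0 means unlimited
  nonRetryableErrorTypes?: string[];
}

// `attempt` is the number of the attempt that just failed (starting at 1).
function shouldRetry(failure: FailureInfo, attempt: number, policy: RetryPolicyModel): boolean {
  if (failure.nonRetryable) return false; // the failure itself forbids retries
  if (policy.nonRetryableErrorTypes?.includes(failure.type)) return false; // policy lists this type
  if (policy.maximumAttempts > 0 && attempt >= policy.maximumAttempts) return false; // out of attempts
  return true;
}

const sketchPolicy: RetryPolicyModel = { maximumAttempts: 5, nonRetryableErrorTypes: ['InsufficientFunds'] };
console.log(shouldRetry({ type: 'Error' }, 1, sketchPolicy));                     // true: e.g. a 429
console.log(shouldRetry({ type: 'InsufficientFunds' }, 1, sketchPolicy));         // false: listed in the policy
console.log(shouldRetry({ type: 'Error', nonRetryable: true }, 1, sketchPolicy)); // false: flagged on the failure
console.log(shouldRetry({ type: 'Error' }, 5, sketchPolicy));                     // false: that was the last attempt
```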

You write a simple HTTP call. Durable execution handles retries with backoff, timeouts, and persistent state. If your worker crashes mid-retry, another worker resumes exactly where it left off.

Watching It Fail (and Recover)

The neural integration has a ~25% failure rate with experimental chrome. Here’s what a failed run looks like. Notice how Temporal handles the Fixer API’s rate limiting before we even get to the failure:

```text
═══════════════════════════════════════════════════════════════════════
CYBERWARE INSTALLATION SAGA - Sandevistan Prototype
Runner: V | Grade: milspec
═══════════════════════════════════════════════════════════════════════

▶ STEP 1: Reserving cyberware from fixer...
[FIXER INVENTORY] Calling Fixer API at http://localhost:8000...
[FIXER INVENTORY] ⚠ Rate limited by fixer. Retry after 10s
... Temporal retries after 10s ...
[FIXER INVENTORY] ⚠ Rate limited by fixer. Retry after 10s
... Temporal retries after 10s ...
[FIXER INVENTORY] ⚠ Rate limited by fixer. Retry after 10s
... Temporal retries after 10s ...
[FIXER INVENTORY] ✓ Reserved Sandevistan Prototype from Wakako Okada. RSV-1706531234

▶ STEP 2: Scheduling ripperdoc appointment...
[RIPPERDOC] ✓ Appointment with Viktor Vektor. Location: Watson, Little China

▶ STEP 3: Processing credstick payment...
[CREDSTICK LEDGER] ✓ Payment processed. Transaction: TXN-1706531235, €$78,125.00

▶ STEP 4: Performing neural integration...
[NEURAL REGISTRY] Beginning neural integration for V
[NEURAL REGISTRY] Administering anesthetics...
[NEURAL REGISTRY] Mapping neural pathways...
[NEURAL REGISTRY] Installing cyberware housing...
[NEURAL REGISTRY] ✗ INTEGRATION FAILED! Compatibility: 62% (needed 75%)

!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!
✗ SAGA FAILED - INITIATING COMPENSATION
!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!

⚠ EMERGENCY: Neural integration failure detected
[NEURAL REGISTRY] Flooding system with neural suppressants...
[NEURAL REGISTRY] ✓ Stabilization successful. Neural damage: 5%

▶ Executing compensation chain (LIFO order)...
[1/3] Refund credstick payment
[CREDSTICK LEDGER] ✓ Refund processed. €$74,218.75 (fee: €$3,906.25)
[2/3] Cancel ripperdoc appointment
[RIPPERDOC] ✓ Appointment cancelled. Deposit forfeited.
[3/3] Release inventory reservation
[FIXER INVENTORY] ✓ Released. Restocking fee: €$11,718.75

═══════════════════════════════════════════════════════════════════════
SAGA COMPENSATION COMPLETE
Total charged: €$78,125.00
Total refunded: €$74,218.75
Net cost to runner: €$3,906.25
═══════════════════════════════════════════════════════════════════════
```

The Temporal UI shows the complete execution history, including each compensation step


Viewing Execution History

If you’ve read the Enterprise Integration Patterns posts, you’ll recognize Message History and Message Store here. Temporal gives you both out of the box. Every activity call, every retry, every compensation gets recorded:

Every step is recorded with timestamps, inputs, outputs, and any errors


Try It Yourself

The complete project is available at github.com/jamescarr/night-city-services.

```shell
# Clone the repo
git clone https://github.com/jamescarr/night-city-services.git
cd night-city-services

# Start Temporal server + Night City services
docker compose up -d

# Install dependencies
pnpm install

# Start the worker (in one terminal)
pnpm run worker

# Run the saga (in another terminal)
pnpm run saga
```

Run pnpm run saga multiple times to see both successful installations and rollbacks with on-chain refunds!

What’s Running

The Docker Compose setup for this project runs all the Temporal infrastructure as well as the services our code integrates with.

- Temporal Server - Durable execution engine (gRPC on 7233)

- Temporal UI - http://localhost:8080 (sometimes needs to be started again once the other services are running)

- PostgreSQL - Temporal persistence

- Fixer API (Python) - Returns 429s to demonstrate retries

- Ripperdoc API (Elixir) - Appointment scheduling

- Ganache - Night City blockchain (Chain ID: 2077)

- Blockchain Explorer - http://localhost:8800

What’s Next

Sagas are just one pattern. In upcoming posts, I’ll implement two more patterns from my Enterprise Integration Patterns series in Temporal.

Scatter-Gather (covered in Message Routing): Query multiple data brokers in parallel and aggregate the results. Perfect for when you need quotes from five different fixers and want the best deal.

Process Manager (covered in its own post): A state machine that responds to external signals in real time. We’ll coordinate a heist against Arasaka Tower, with the ability to abort mid-operation when MaxTac shows up.

Both patterns are already implemented in the demo repo if you want to peek ahead:

```shell
pnpm run scatter     # Scatter-Gather
pnpm run heist       # Process Manager
pnpm run heist:abort # Process Manager with abort signal
```
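Here’s a dependency-free sketch of the scatter-gather shape. It fans the request out to every broker concurrently with Promise.allSettled, keeps the answers that came back, and picks the cheapest. The Broker and Quote types and the broker names are hypothetical. The demo repo’s implementation may aggregate differently.

```typescript
interface Quote {
  broker: string;
  price: number;
}

type Broker = (item: string) => Promise<Quote>;

// Scatter to all brokers in parallel, gather whatever succeeded, pick the cheapest.
async function bestQuote(item: string, brokers: Broker[]): Promise<Quote | undefined> {
  const results = await Promise.allSettled(brokers.map((ask) => ask(item)));
  const quotes = results
    .filter((r): r is PromiseFulfilledResult<Quote> => r.status === 'fulfilled')
    .map((r) => r.value);
  return quotes.reduce<Quote | undefined>(
    (best, q) => (best === undefined || q.price < best.price ? q : best),
    undefined,
  );
}

// Hypothetical brokers for the usage example: two answer, one is offline.
const fixedPrice = (broker: string, price: number): Broker => async () => ({ broker, price });
const offline: Broker = async () => {
  throw new Error('broker offline');
};

bestQuote('optics', [fixedPrice('broker-a', 12000), fixedPrice('broker-b', 9500), offline]).then(
  (q) => console.log(q?.broker, q?.price), // broker-b 9500
);
```

Using allSettled rather than Promise.all means one unreachable broker doesn’t sink the whole query.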

References

- What is Durable Execution?

- Temporal Documentation

- Temporal TypeScript SDK

- Night City Services Demo

- Enterprise Integration Patterns

- Saga Pattern

“The street finds its own uses for things.” - William Gibson, Neuromancer