Since returning to Zapier, I’ve been building the features enterprise customers crave for managing automation at scale. Event-driven architectures, message routing, guaranteed delivery, audit trails. The stuff that enterprise buyers expect as table stakes. It’s reminded me how much this foundational knowledge matters.

One thing keeps coming up: audit logging. Every enterprise customer wants to know what happened, when, and by whom. It sounds simple until you’re building it for the fifth time, wiring up the same patterns to yet another data store. There has to be a better way.

This year, I’m doing something different for the holidays. Instead of Advent of Code, I want to spend twelve days digging into fundamental messaging patterns. The kind of pattern literature from my Java days that I believe is more important today than ever before.

Chief among these is Enterprise Integration Patterns by Gregor Hohpe and Bobby Woolf. Published in 2003, it predates the microservices revolution, the rise of Kafka, and the cloud-native movement. Yet its patterns remain just as relevant today, perhaps even more so than when it was written. It’s one of those rare books that reshaped how I think about building software.

A Book That Shaped My Career

I stumbled upon EIP early in my career when I was drowning in integration complexity. The landscape was brutal: SOAP services talking to legacy proprietary systems, Spring-based Java applications that needed to communicate with PL/1 systems running on OpenVMS, and a sprawling mess of cron jobs that had accumulated over years of “just add another script” solutions.

The cron job situation was particularly painful. We had vehicle history report data flowing through a Rube Goldberg machine of scheduled tasks, each one dependent on the previous having completed, with no visibility into failures and no way to retry individual steps. Data that should have been available in minutes was taking days to appear on reports. Customers were frustrated. We were frustrated.

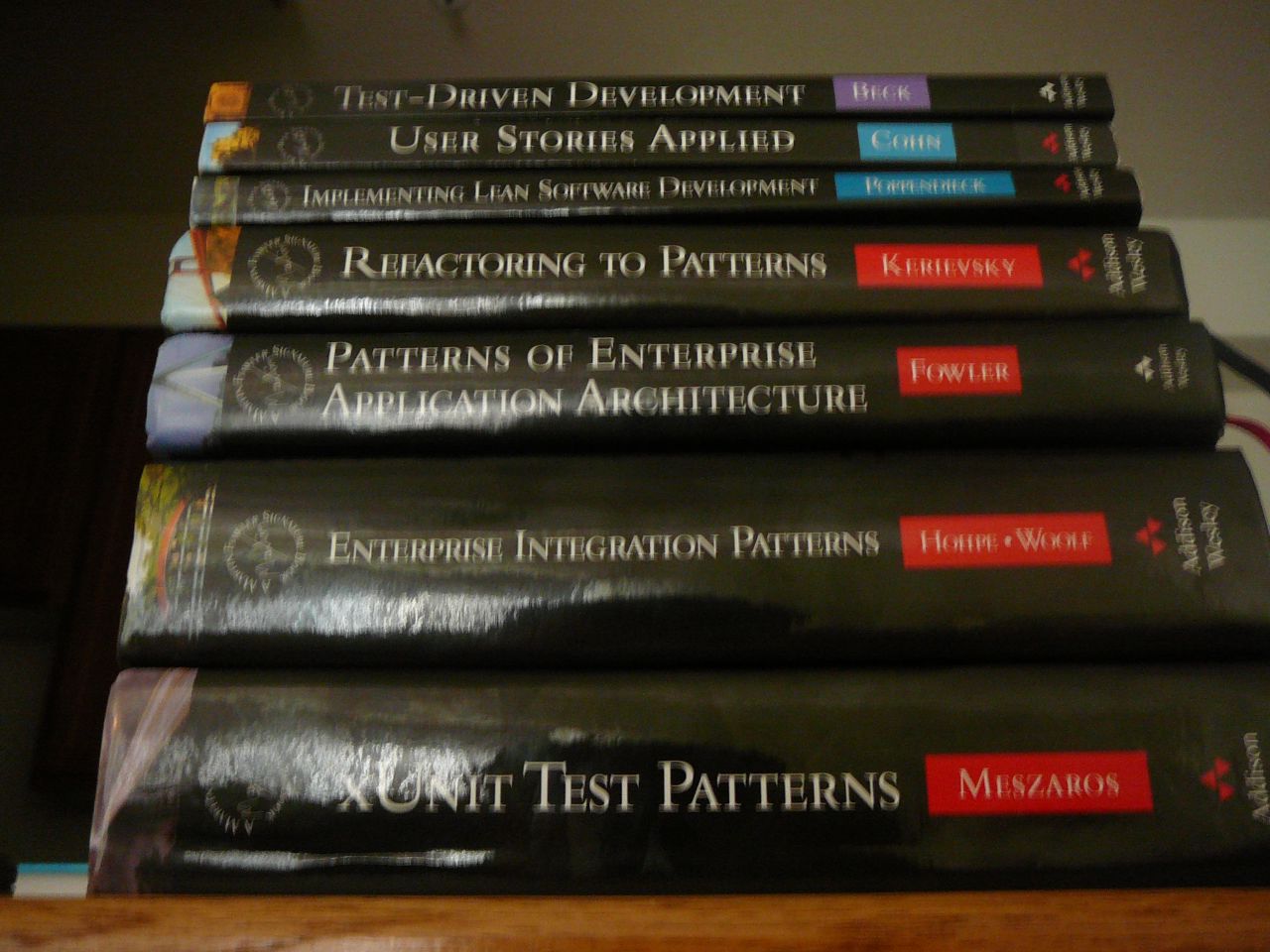

At the time, I was on a Martin Fowler Signature Series reading binge. I’d worked my way through Refactoring, then Patterns of Enterprise Application Architecture, followed by Refactoring to Patterns. Each book had leveled up my thinking, but when I picked up Enterprise Integration Patterns, something different clicked.

Here was a vocabulary for talking about integration problems. Suddenly, the chaos had names: these weren’t just “message queues” and “web services.” They were Message Channels, Message Routers, Channel Adapters, and Message Translators. The patterns gave me a mental framework for breaking down complex integration challenges into manageable, well-understood pieces.

I introduced RabbitMQ and started applying the patterns systematically. The cron job nightmare became an event-driven pipeline. Data that once took days now flowed through in minutes. The PL/1 systems on OpenVMS? We wrapped them with Channel Adapters that spoke AMQP. The SOAP services? Message Translators converted their XML payloads into our canonical format. Different processing needs? Content-Based Routers directed messages to the appropriate handlers.

That was over fifteen years ago, and I’ve returned to this book countless times since. Whether building event-driven architectures at scale, designing webhook systems, or architecting audit trails across distributed systems, the patterns in EIP have been my constant companion.

Why Revisit These Patterns Now?

The software landscape has transformed dramatically since 2003. We’ve moved from ESBs and SOAP to Kafka and gRPC. From monoliths to microservices. From on-prem to serverless. Yet the fundamental challenges of system integration remain unchanged:

- How do you reliably move data between systems?

- How do you transform messages as they flow through your architecture?

- How do you handle failures gracefully?

- How do you build systems that can evolve independently?

If anything, the proliferation of services and the rise of event-driven architecture have made these patterns more critical. Understanding the Publish-Subscribe Channel isn’t just academic. It’s essential for working with Kafka, SNS, or any modern event streaming platform. The Dead Letter Channel pattern is literally built into SQS. Message Translators are what we now call “adapters” or “transformers” in our data pipelines.

The Agentic Future

And then there’s AI. We’re entering an era of agentic systems: autonomous agents that perceive, reason, and act. These agents need to communicate with each other, coordinate complex workflows, call external tools, and handle failures gracefully. If that sounds familiar, it should.

When you strip away the LLM magic, an AI agent orchestration system is fundamentally a messaging system:

- Agents exchange messages with each other and with tools (Message, Message Channel)

- Requests fan out to multiple specialized agents (Publish-Subscribe, Recipient List)

- Results aggregate back from parallel processing (Aggregator, Scatter-Gather)

- Failures happen and need graceful handling (Dead Letter Channel, Retry patterns)

- Context flows through multi-step reasoning chains (Correlation Identifier, Message History)

- Inputs transform between different agent formats and tool APIs (Message Translator)
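Here’s a rough Python sketch of the mapping, showing Scatter-Gather with a Correlation Identifier. The “agents” are plain async functions standing in for LLM or tool calls. The agent names and the `scatter_gather` helper are hypothetical and don’t come from any of the frameworks named below.

```python
import asyncio
import uuid

# Hypothetical stand-ins for specialized agents; a real system would call an LLM or tool here.
async def summarizer(request: dict) -> dict:
    return {"correlation_id": request["correlation_id"], "agent": "summarizer",
            "output": request["text"][:20]}

async def classifier(request: dict) -> dict:
    label = "security" if "login" in request["text"] else "general"
    return {"correlation_id": request["correlation_id"], "agent": "classifier", "output": label}

async def scatter_gather(text: str, agents) -> dict:
    # Correlation Identifier: the request and every reply carry the same ID.
    correlation_id = str(uuid.uuid4())
    request = {"correlation_id": correlation_id, "text": text}
    # Scatter: send the same request to every agent concurrently.
    replies = await asyncio.gather(*(agent(request) for agent in agents))
    # Gather (Aggregator): keep only replies for this request and combine them by agent name.
    return {
        "correlation_id": correlation_id,
        "results": {r["agent"]: r["output"] for r in replies
                    if r["correlation_id"] == correlation_id},
    }

result = asyncio.run(scatter_gather("User login failed three times", [summarizer, classifier]))
print(result["results"])
```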

Frameworks across the ecosystem are implementing these same patterns, often without naming them. Python has LangGraph and DSPy. Java developers have Spring AI. .NET has Semantic Kernel. The Elixir community has Instructor for structured outputs. And honestly, OTP’s supervision trees and message passing provide primitives that map naturally to agent orchestration. That’s one reason I’m excited to explore this in Gleam. Understanding EIP gives you a vocabulary and mental model for designing robust agent architectures, regardless of which framework or language you use.

I think it’s time to re-examine these patterns through a modern lens and see how they can help us build robust, scalable systems, whether those systems are processing audit events, coordinating microservices, or orchestrating AI agents.

The Project: Chronicle

Rather than explore these patterns in isolation, I want to build something real. Over the next 12 days, I’ll be constructing Chronicle, an open-source audit logging system you can actually use.

The goal? A drop-in solution for SaaS products that need customer-facing audit logs. Wire Chronicle to your favorite data store, and your users get a full audit trail of what happened in their account. No more building audit logging from scratch for each product.

Why audit logging? Beyond being useful, it’s a perfect domain for exploring integration patterns:

- Multiple producers: Different services need to emit audit events

- Multiple consumers: Events need to flow to various storage backends and analytics systems

- Transformation requirements: Events from different sources need normalization

- Reliability demands: You can’t lose audit data

- Extensibility needs: New storage backends should be easy to add

Why Gleam?

For the implementation, I’m choosing Gleam, a type-safe functional language that runs on the Erlang VM (BEAM). Why Gleam for an integration patterns project?

- The BEAM is built for this: Erlang was literally designed for building reliable, distributed, fault-tolerant systems. Message passing is in its DNA. See The Soul of Erlang and Elixir for why this matters.

- Type safety with pragmatism: Gleam’s type system catches errors at compile time while remaining approachable. Check out Gleam’s Language Tour to see it in action.

- Interop with the ecosystem: Access to the vast OTP library ecosystem and the ability to call Erlang/Elixir code.

- It’s fun: Sometimes that matters.

Each day, I’ll provide implementations in Gleam for Chronicle, but I’ll also include conceptual explanations and examples in more accessible languages (TypeScript, Python) so the patterns themselves remain the focus.

The 12 Days of Enterprise Integration Patterns

Here’s the roadmap for our journey. Each day builds upon the previous, constructing a more complete and robust system.

A note on plans: Software development rarely follows a straight line, and neither will this series. While I’ve laid out a roadmap below, we may discover that certain topics deserve more depth, or that the natural flow of building Chronicle takes us in a slightly different direction. I’ll do my best to stay on course, but consider this outline a guide rather than a contract. The patterns we cover will remain the same; the order and groupings might shift as we learn together.

We might also take occasional detours to explore frameworks that have already implemented these patterns. Apache Camel has been doing this for nearly two decades. Redpanda Connect (formerly Benthos) offers a modern, config-driven approach. These tools are worth understanding even if we’re building Chronicle from scratch.

Day 1: Integration Styles & Bootstrapping Chronicle

We’ll start at the very beginning: the four fundamental integration styles. File Transfer, Shared Database, Remote Procedure Invocation, and Messaging. We’ll explore why Messaging is the right choice for Chronicle, examining the trade-offs each style brings. Then we’ll get our hands dirty: bootstrap the Gleam project, define our first Message type (the audit event), and build a simple CRUD API with a Point-to-Point Channel to an in-memory persistence layer. By day’s end, we’ll have the foundation of a real audit logging service, not just a toy example.
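As a preview of Day 1, here’s a minimal Python sketch. It uses a `queue.Queue` as the Point-to-Point Channel and a plain dict as the in-memory persistence layer. The `AuditEvent` fields and the function names are placeholders. Chronicle’s Gleam types will differ.

```python
import queue
import uuid
from dataclasses import dataclass, field
from datetime import datetime, timezone

@dataclass(frozen=True)
class AuditEvent:
    # Placeholder Message type; field names are illustrative only.
    actor: str
    action: str
    resource: str
    id: str = field(default_factory=lambda: str(uuid.uuid4()))
    timestamp: datetime = field(default_factory=lambda: datetime.now(timezone.utc))

# Point-to-Point Channel: each message put on the queue is taken by exactly one consumer.
channel: "queue.Queue[AuditEvent]" = queue.Queue()
# In-memory persistence layer keyed by event ID.
store: dict[str, AuditEvent] = {}

def create_event(actor: str, action: str, resource: str) -> str:
    """API side: wrap the request in a Message and send it on the channel."""
    event = AuditEvent(actor, action, resource)
    channel.put(event)
    return event.id

def drain_channel() -> None:
    """Consumer side: persist every pending message (a real service would loop in its own process)."""
    while not channel.empty():
        event = channel.get()
        store[event.id] = event
        channel.task_done()

event_id = create_event("alice", "user.login", "account:42")
drain_channel()
print(store[event_id].action)
```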

If you want some background on why messaging architectures matter before we dive in, Martin Fowler’s talk “The Many Meanings of Event-Driven Architecture” is a great primer.

Day 2: Channels, Endpoints, and Pipes & Filters

With our basic service running, we’ll deepen our understanding of the core abstractions. What makes a Message Channel more than just a function call? What’s a Message Endpoint and why does it matter? We’ll refactor Chronicle to properly separate concerns, implement our channel abstraction in Gleam using OTP processes, and introduce Pipes and Filters as our architectural style. These composable processing steps will carry us throughout the series. We’ll see how the BEAM’s actor model maps naturally to these concepts.
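At its core, Pipes and Filters means composing small, single-purpose steps. In this Python sketch, each filter takes a message dict and returns a new one, or `None` to drop it. The filter names are hypothetical. The sketch is also synchronous, so it doesn’t model the per-filter OTP processes the Gleam version will likely use.

```python
from functools import reduce
from typing import Callable, Optional

Message = dict
Filter = Callable[[Message], Optional[Message]]

def validate(msg: Message) -> Optional[Message]:
    # Hypothetical filter: drop messages missing required fields.
    return msg if msg.keys() >= {"actor", "action"} else None

def normalize(msg: Message) -> Message:
    # Lower-case the action so downstream filters see a consistent vocabulary.
    return {**msg, "action": msg["action"].lower()}

def stamp(msg: Message) -> Message:
    # Record which pipeline processed the message.
    return {**msg, "pipeline": "ingest-v1"}

def pipeline(*filters: Filter) -> Filter:
    """Compose filters left to right; a None result short-circuits the rest of the pipe."""
    def run(msg: Message) -> Optional[Message]:
        return reduce(lambda m, f: f(m) if m is not None else None, filters, msg)
    return run

ingest = pipeline(validate, normalize, stamp)
print(ingest({"actor": "alice", "action": "USER.LOGIN"}))
print(ingest({"action": "USER.LOGIN"}))
```

Because every filter has the same signature, you can reorder, remove, or insert steps without touching the others.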

Day 3: Message Construction - Event, Document, and Command

Audit logs are about recording events, but not all messages are created equal. We’ll explore Event Message for our audit events (immutable facts about what happened), Document Message for data transfer (the actual audit payload), and Command Message for administrative operations (query, purge, replay). We’ll design Chronicle’s audit event schema: actor, action, resource, timestamp, and metadata. We’ll also implement Correlation Identifier for distributed tracing and Message Expiration for TTL handling.
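Here’s one way to express the three message kinds, plus Correlation Identifier and Message Expiration, in Python. The payload fields follow the schema listed above. The envelope classes, the `ttl_seconds` field, and the `is_expired` helper are illustrative assumptions, not Chronicle’s final design.

```python
import uuid
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone
from typing import Any, Optional

def now() -> datetime:
    return datetime.now(timezone.utc)

@dataclass(frozen=True)
class AuditEvent:
    """Document Message: the audit payload itself."""
    actor: str
    action: str
    resource: str
    timestamp: datetime = field(default_factory=now)
    metadata: dict[str, Any] = field(default_factory=dict)

@dataclass(frozen=True)
class EventMessage:
    """Event Message: an immutable fact that something happened, wrapping the document."""
    payload: AuditEvent
    # Correlation Identifier: ties together every message caused by the same request.
    correlation_id: str = field(default_factory=lambda: str(uuid.uuid4()))
    # Message Expiration (hypothetical field): None means the message never expires.
    ttl_seconds: Optional[int] = None
    created_at: datetime = field(default_factory=now)

    def is_expired(self, at: Optional[datetime] = None) -> bool:
        if self.ttl_seconds is None:
            return False
        return (at or now()) > self.created_at + timedelta(seconds=self.ttl_seconds)

@dataclass(frozen=True)
class CommandMessage:
    """Command Message: asks the system to do something (query, purge, replay)."""
    name: str
    args: dict[str, Any] = field(default_factory=dict)
    correlation_id: str = field(default_factory=lambda: str(uuid.uuid4()))

evt = EventMessage(AuditEvent("alice", "document.delete", "doc:7"), ttl_seconds=60)
replay = CommandMessage("replay", {"since": "2024-12-01"})
print(evt.is_expired(), evt.is_expired(evt.created_at + timedelta(seconds=61)))
```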

Day 4: Channel Types - Point-to-Point and Publish-Subscribe

Channels come in different flavors for different needs. We’ll implement both Point-to-Point Channel for our command processing (exactly one consumer processes each message) and Publish-Subscribe Channel for event distribution (every subscriber gets a copy). We’ll build these abstractions in Gleam, discuss when to use each, and explore Datatype Channel (separate channels per message type) and Invalid Message Channel for quarantining problematic messages.
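This minimal in-process Python sketch contrasts the two channel types. The Point-to-Point channel hands each message to one consumer in round-robin order. The Publish-Subscribe channel copies every message to every subscriber. Messages that fail validation go to an Invalid Message Channel instead. The class names are hypothetical, and delivery is synchronous for clarity. Datatype Channel is left out.

```python
from itertools import cycle
from typing import Callable

Handler = Callable[[dict], None]

class PointToPointChannel:
    """Hypothetical class: each message goes to exactly one consumer (round-robin)."""
    def __init__(self, consumers: list[Handler]):
        self._next = cycle(consumers)

    def send(self, msg: dict) -> None:
        next(self._next)(msg)

class PublishSubscribeChannel:
    """Hypothetical class: every subscriber receives its own copy of each message."""
    def __init__(self) -> None:
        self._subscribers: list[Handler] = []

    def subscribe(self, handler: Handler) -> None:
        self._subscribers.append(handler)

    def publish(self, msg: dict) -> None:
        for handler in self._subscribers:
            handler(dict(msg))  # shallow copy so one subscriber can't mutate another's message

invalid_messages: list[dict] = []  # Invalid Message Channel (just a list here)

def publish_validated(channel: PublishSubscribeChannel, msg: dict) -> None:
    if "action" not in msg:
        invalid_messages.append(msg)  # quarantine instead of crashing subscribers
        return
    channel.publish(msg)

worker_a, worker_b = [], []
commands = PointToPointChannel([worker_a.append, worker_b.append])
for i in range(4):
    commands.send({"command": "replay", "n": i})

store, alerts = [], []
events = PublishSubscribeChannel()
events.subscribe(store.append)
events.subscribe(alerts.append)
publish_validated(events, {"action": "user.login"})
publish_validated(events, {"actor": "bob"})
print(len(worker_a), len(worker_b), len(store), len(alerts), len(invalid_messages))
```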

Day 5: The HTTP API Gateway - Channel Adapters and Messaging Gateways

Every system needs an entry point. We’ll build Chronicle’s HTTP API using Gleam’s Wisp framework. This serves as a Channel Adapter that bridges the synchronous HTTP world to our asynchronous messaging internals. We’ll implement proper request validation, return correlation IDs for tracking, and design our REST endpoints. We’ll also implement a Messaging Gateway to encapsulate messaging logic behind a clean interface and a Service Activator to connect our service to the messaging system. By day’s end, you’ll be able to POST audit events to Chronicle.
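Strip away the HTTP layer and the Messaging Gateway and Service Activator pair looks roughly like this Python sketch. `AuditGateway`, `record`, `AuditService`, and `service_activator` are all hypothetical names. In Chronicle, the Wisp handler would play the HTTP Channel Adapter role. It would call something like `record(...)` and return the correlation ID to the client, for example with a 202 Accepted status.

```python
import queue
import uuid

class AuditGateway:
    """Messaging Gateway (hypothetical): callers see a plain method, not queues or envelopes."""
    def __init__(self, channel: queue.Queue):
        self._channel = channel

    def record(self, actor: str, action: str, resource: str) -> str:
        correlation_id = str(uuid.uuid4())
        self._channel.put({"correlation_id": correlation_id,
                           "actor": actor, "action": action, "resource": resource})
        return correlation_id  # handed back to the HTTP client for tracking

class AuditService:
    """Plain domain service with no knowledge of messaging."""
    def __init__(self) -> None:
        self.saved: dict[str, dict] = {}

    def save(self, actor: str, action: str, resource: str, correlation_id: str) -> None:
        self.saved[correlation_id] = {"actor": actor, "action": action, "resource": resource}

def service_activator(channel: queue.Queue, service: AuditService) -> int:
    """Service Activator: unpack each message and invoke the service; return how many were handled."""
    handled = 0
    while not channel.empty():
        msg = channel.get()
        service.save(msg["actor"], msg["action"], msg["resource"], msg["correlation_id"])
        handled += 1
    return handled

channel: queue.Queue = queue.Queue()
gateway = AuditGateway(channel)
cid = gateway.record("alice", "invoice.view", "invoice:99")
service = AuditService()
print(service_activator(channel, service), service.saved[cid]["action"])
```

Neither side knows about the other. Only the channel connects them, so the in-memory queue can later be swapped for something durable.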

Day 6: The Message Bus - Kafka and Guaranteed Delivery

A single HTTP endpoint won’t scale, and in-memory channels don’t survive restarts. We’ll introduce a Message Bus using Apache Kafka to decouple producers from consumers and provide durability. We’ll cover Kafka fundamentals (topics, partitions, consumer groups) and implement Guaranteed Delivery to ensure no audit events are lost. This is critical for compliance. We’ll set up Durable Subscriber patterns so consumers can disconnect and reconnect without missing messages. For Kafka deep-dives, see Kafka: The Definitive Guide from Confluent.
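To show why Durable Subscriber matters, here’s a toy Python model of a Kafka-style log. Messages are appended to a single partition, and each consumer group tracks a committed offset, so a consumer that disconnects resumes where it left off. This is not the Kafka client API. It keeps everything in memory, whereas Kafka persists both the log and the offsets. It commits only after processing, which gives at-least-once delivery.

```python
from collections import defaultdict

class Log:
    """Toy append-only log standing in for one Kafka partition (not the Kafka API)."""
    def __init__(self) -> None:
        self.records: list[dict] = []
        # Committed offset per consumer group: the index of the next record to read.
        self.committed: dict[str, int] = defaultdict(int)

    def append(self, record: dict) -> int:
        self.records.append(record)
        return len(self.records) - 1  # offset of the new record

    def poll(self, group: str, max_records: int = 10) -> list[tuple[int, dict]]:
        start = self.committed[group]
        return list(enumerate(self.records[start:start + max_records], start))

    def commit(self, group: str, offset: int) -> None:
        self.committed[group] = offset + 1

def consume(log: Log, group: str, sink: list) -> None:
    for offset, record in log.poll(group):
        sink.append(record)
        # Commit *after* processing: a crash before this line means redelivery, not loss.
        log.commit(group, offset)

log = Log()
archive: list[dict] = []
log.append({"action": "user.login"})
consume(log, "archiver", archive)        # archiver reads the first event
log.append({"action": "user.logout"})    # arrives while the archiver is "offline"
log.append({"action": "role.grant"})
consume(log, "archiver", archive)        # resumes from its committed offset
print([r["action"] for r in archive], log.committed["archiver"])
```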

Day 7: Message Routing - Content-Based Routing and Filtering

Not all messages go everywhere. Compliance events might need special handling; high-severity events might trigger alerts; internal debug events shouldn’t reach external systems. We’ll implement Content-Based Router to direct messages based on their content (event type, severity, source), Message Filter to drop unwanted messages entirely (think noise reduction or discarding internal debug events), Recipient List for dynamic routing based on subscription rules, and Splitter for breaking apart batch events into individual messages.
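Here’s a compact Python sketch of the four routing patterns operating on plain dicts. The severity levels, destination names, and prefix-based subscription rules are hypothetical.

```python
from typing import Iterable

def split(batch: dict) -> Iterable[dict]:
    """Splitter: break a batch envelope into individual events."""
    for event in batch["events"]:
        yield {**event, "source": batch["source"]}

def message_filter(event: dict) -> bool:
    """Message Filter: drop internal debug events entirely."""
    return event.get("severity") != "debug"

def route(event: dict) -> str:
    """Content-Based Router: choose exactly one destination from the event's content."""
    if event.get("category") == "compliance":
        return "compliance"
    if event.get("severity") == "high":
        return "alerts"
    return "default"

SUBSCRIPTIONS = [  # hypothetical rules: (subscriber, required action prefix)
    ("security-team", "auth."),
    ("billing-webhook", "invoice."),
    ("siem-export", ""),  # empty prefix matches everything
]

def recipient_list(event: dict) -> list[str]:
    """Recipient List: compute every subscriber that should receive a copy."""
    return [name for name, prefix in SUBSCRIPTIONS if event["action"].startswith(prefix)]

batch = {"source": "api", "events": [
    {"action": "auth.failed", "severity": "high"},
    {"action": "cache.miss", "severity": "debug"},
    {"action": "invoice.paid", "category": "compliance"},
]}
for event in filter(message_filter, split(batch)):
    print(event["action"], route(event), recipient_list(event))
```

The distinction worth noticing is that the router picks one destination, while the recipient list can pick many.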

Day 8: Message Transformation - Translators and Enrichers

The real world is messy. External systems don’t speak our language, and raw events often lack context. We’ll build Message Translators to convert between Chronicle’s canonical audit format and external formats. This includes ingesting AWS CloudTrail events and exporting to SIEM systems like Splunk or Elasticsearch. We’ll implement Content Enricher to augment events with geo-IP data from client addresses, user details from an identity service, or resource metadata from a catalog. We’ll add Content Filter to strip sensitive data before external transmission, and discuss Canonical Data Model for system-wide consistency.
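Chained together, translator, enricher, and content filter look something like this Python sketch. The input uses a small subset of real CloudTrail record fields (`eventTime`, `eventSource`, `eventName`, `userIdentity.arn`, `sourceIPAddress`, `awsRegion`, `requestParameters`). The canonical field names, the geo lookup stub, and the sensitive-key policy are assumptions for illustration.

```python
from typing import Callable

def translate_cloudtrail(record: dict) -> dict:
    """Message Translator: map a (subset of a) CloudTrail record onto a canonical audit event."""
    return {
        "actor": record["userIdentity"]["arn"],
        "action": f'{record["eventSource"]}:{record["eventName"]}',
        "resource": record.get("requestParameters", {}).get("bucketName", "unknown"),
        "timestamp": record["eventTime"],
        "metadata": {"ip": record["sourceIPAddress"], "region": record["awsRegion"]},
    }

def enrich(event: dict, lookup_geo: Callable[[str], str]) -> dict:
    """Content Enricher: add data the producer didn't have, via an external lookup."""
    geo = lookup_geo(event["metadata"]["ip"])
    return {**event, "metadata": {**event["metadata"], "geo": geo}}

SENSITIVE_KEYS = {"ip"}  # hypothetical policy: raw IPs never leave Chronicle

def content_filter(event: dict) -> dict:
    """Content Filter: strip sensitive fields before sending the event to an external system."""
    safe_meta = {k: v for k, v in event["metadata"].items() if k not in SENSITIVE_KEYS}
    return {**event, "metadata": safe_meta}

def fake_geo(ip: str) -> str:
    return "US"  # hypothetical stand-in for a real geo-IP service

record = {
    "eventTime": "2024-12-01T12:00:00Z",
    "eventSource": "s3.amazonaws.com",
    "eventName": "DeleteBucket",
    "userIdentity": {"arn": "arn:aws:iam::123456789012:user/alice"},
    "sourceIPAddress": "203.0.113.7",
    "awsRegion": "us-east-1",
    "requestParameters": {"bucketName": "audit-archive"},
}
outbound = content_filter(enrich(translate_cloudtrail(record), fake_geo))
print(outbound["action"], outbound["metadata"])
```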

Day 9: Output Adapters - From Memory to Elasticsearch to DynamoDB

Time to persist our audit events! We’ll start by examining our existing in-memory data store as our first Channel Adapter output. It’s useful for testing, but not for production. Then we’ll build real adapters: Elasticsearch for full-text search, aggregations, and dashboards; DynamoDB for point lookups and time-series queries with single-digit millisecond latency. We’ll explore how the same events fan out to multiple destinations with different characteristics, use Competing Consumers so each adapter can scale horizontally, and discuss the adapter pattern’s role in making Chronicle extensible. Want to add S3 archival or a webhook notifier? Just write another adapter.
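The extensibility argument depends on every output adapter sharing one small interface. Here’s a Python sketch using `typing.Protocol`. The `StorageAdapter` name and its `write` method are hypothetical. The JSON-lines file adapter merely stands in for a real Elasticsearch, DynamoDB, or S3 client.

```python
import json
import tempfile
from pathlib import Path
from typing import Protocol

class StorageAdapter(Protocol):
    """Hypothetical outbound Channel Adapter contract: take a canonical event, persist it."""
    def write(self, event: dict) -> None: ...

class InMemoryAdapter:
    """Useful in tests; everything is lost on restart."""
    def __init__(self) -> None:
        self.events: list[dict] = []

    def write(self, event: dict) -> None:
        self.events.append(event)

class JsonLinesAdapter:
    """Appends one JSON document per line; a stand-in for a real archival or search backend."""
    def __init__(self, path: Path) -> None:
        self.path = path

    def write(self, event: dict) -> None:
        with self.path.open("a", encoding="utf-8") as f:
            f.write(json.dumps(event) + "\n")

def deliver(event: dict, adapters: list[StorageAdapter]) -> None:
    # Each destination gets the same event; adding a backend means adding an adapter here.
    for adapter in adapters:
        adapter.write(event)

memory = InMemoryAdapter()
with tempfile.TemporaryDirectory() as tmp:
    archive = JsonLinesAdapter(Path(tmp) / "audit.jsonl")
    deliver({"actor": "alice", "action": "user.login"}, [memory, archive])
    lines = archive.path.read_text(encoding="utf-8").splitlines()
print(len(memory.events), lines)
```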

Day 10: Advanced Routing - Aggregation and Scatter-Gather

Complex workflows need sophisticated routing. What if we want to combine multiple related audit events into a single summary? Or query multiple backends in parallel? We’ll implement Aggregator to combine related events (e.g., all events from a single user session), Resequencer to restore message ordering when parallel processing scrambles sequences, and Scatter-Gather for fanning out queries to multiple storage backends and merging results. We’ll also touch on Routing Slip for dynamic processing pipelines where the route depends on the message content.
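This Python sketch shows an Aggregator and a Resequencer. The aggregator groups events by a hypothetical `session_id` and treats a `session.end` action as its completion condition. The resequencer assumes each message carries a unique, contiguous `seq` number starting at 0. Both keep their state in memory, which a production version would need to persist.

```python
from collections import defaultdict
from typing import Optional

class SessionAggregator:
    """Aggregator: collect related events and emit one summary when the group is complete."""
    def __init__(self) -> None:
        self._groups: dict[str, list[dict]] = defaultdict(list)

    def add(self, event: dict) -> Optional[dict]:
        group = self._groups[event["session_id"]]
        group.append(event)
        if event["action"] != "session.end":  # hypothetical completion condition
            return None
        del self._groups[event["session_id"]]
        return {"session_id": event["session_id"], "actions": [e["action"] for e in group]}

class Resequencer:
    """Resequencer: buffer out-of-order messages and release them in seq order."""
    def __init__(self) -> None:
        self._next = 0
        self._pending: dict[int, dict] = {}

    def add(self, msg: dict) -> list[dict]:
        self._pending[msg["seq"]] = msg
        released = []
        while self._next in self._pending:  # release every message that is now in order
            released.append(self._pending.pop(self._next))
            self._next += 1
        return released

agg = SessionAggregator()
for action in ["session.start", "doc.view", "session.end"]:
    summary = agg.add({"session_id": "s1", "action": action})
print(summary)

reseq = Resequencer()
releases = [reseq.add({"seq": s}) for s in (1, 2, 0, 3)]
print(releases)
```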

Day 11: Error Handling - Dead Letters and Retry Patterns

Things will fail. Networks partition. Services crash. Databases time out. Schemas evolve. We’ll implement the Dead Letter Channel for messages that can’t be processed after multiple retries. This is essential for debugging and recovery. We’ll route malformed inputs that fail validation to an Invalid Message Channel. We’ll discuss retry strategies such as exponential backoff, along with circuit breakers for failing dependencies, and implement Transactional Client and Idempotent Receiver, which together make retries safe and give us effectively exactly-once processing.
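Here’s a Python sketch of retry with exponential backoff feeding a Dead Letter Channel, plus an Idempotent Receiver that ignores redelivered messages by ID. The attempt count and delays are arbitrary. `sleep` is injectable so the example runs instantly. The dedup set lives in memory, whereas a real receiver would persist it, ideally in the same transaction as the write.

```python
import time
from typing import Callable

dead_letters: list[dict] = []  # Dead Letter Channel (a list for this sketch)

def process_with_retry(msg: dict, handler: Callable[[dict], None], max_attempts: int = 4,
                       base_delay: float = 0.1,
                       sleep: Callable[[float], None] = time.sleep) -> bool:
    """Try the handler with exponential backoff; dead-letter the message if every attempt fails."""
    for attempt in range(max_attempts):
        try:
            handler(msg)
            return True
        except Exception as exc:  # broad catch: any handler failure is retried
            if attempt == max_attempts - 1:
                dead_letters.append({**msg, "error": repr(exc), "attempts": max_attempts})
                return False
            sleep(base_delay * 2 ** attempt)  # 0.1s, 0.2s, 0.4s, ...
    return False

class IdempotentReceiver:
    """Skip messages whose ID was already processed, so redelivery after a retry is harmless."""
    def __init__(self, handler: Callable[[dict], None]) -> None:
        self._handler = handler
        self._seen: set[str] = set()

    def __call__(self, msg: dict) -> None:
        if msg["id"] in self._seen:
            return
        self._handler(msg)
        self._seen.add(msg["id"])  # mark only after success so failures are retried

stored: list[dict] = []
delays: list[float] = []
receiver = IdempotentReceiver(stored.append)
process_with_retry({"id": "e1"}, receiver, sleep=delays.append)
process_with_retry({"id": "e1"}, receiver, sleep=delays.append)  # duplicate delivery, ignored

def always_fails(msg: dict) -> None:
    raise ConnectionError("database timed out")

process_with_retry({"id": "e2"}, always_fails, sleep=delays.append)
print(len(stored), len(dead_letters), delays)
```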

Day 12: Observability and System Management - Wire Tap, Message Store, and Control Bus

We’ll wrap up with production-critical patterns that let you see inside the black box. Wire Tap for non-invasive monitoring: copy messages to an inspection channel without affecting the main flow. Message Store for event replay and audit trails, with every message persisted for compliance and debugging. Message History for debugging message flow, so you can see everywhere a message has been. And Control Bus for system-wide management: start/stop consumers, adjust routing rules, check health. We’ll tie it all together into Chronicle, a complete, production-ready audit logging system.
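This small Python sketch combines Wire Tap and Message History. Each processing step appends its name to the message’s history. The tap sends a copy of each message to an inspection channel without altering what flows downstream. The step names and the list-based monitor channel are hypothetical.

```python
import copy
from typing import Callable

Step = Callable[[dict], dict]

def with_history(name: str, step: Step) -> Step:
    """Message History: record every component the message passed through."""
    def wrapped(msg: dict) -> dict:
        out = step(msg)
        return {**out, "history": [*msg.get("history", []), name]}
    return wrapped

def wire_tap(step: Step, inspection: list[dict]) -> Step:
    """Wire Tap: copy each message to an inspection channel, then pass it on unchanged."""
    def tapped(msg: dict) -> dict:
        inspection.append(copy.deepcopy(msg))  # deep copy so later steps can't alter the tap's view
        return step(msg)
    return tapped

monitor: list[dict] = []  # hypothetical inspection channel
normalize = with_history("normalize", lambda m: {**m, "action": m["action"].lower()})
persist = with_history("persist", lambda m: {**m, "stored": True})

msg = {"action": "USER.LOGIN"}
for step in (wire_tap(normalize, monitor), persist):
    msg = step(msg)
print(msg["history"], monitor)
```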

What You’ll Get

By the end of this series, we will have:

- A deep understanding of the Enterprise Integration Patterns that power Chronicle

- Patterns you can apply to any event-driven architecture, in any language

- Hands-on experience applying these patterns with Gleam and the BEAM ecosystem

- Chronicle: a production-ready audit logging system you can drop into your SaaS product

The Repository

All code will be available on GitHub at jamescarr/chronicle. Each day will have its own branch so you can follow along incrementally, and the main branch will contain the complete system.

Let’s Begin

I’m excited about this project. Enterprise Integration Patterns gave me a vocabulary and a way of thinking about distributed systems that has paid dividends throughout my career. My hope is that by the end of these 12 days, you’ll have that same vocabulary, and Chronicle to show for it.

Tomorrow, we begin with Day 1: Integration Styles. We’ll explore the four fundamental approaches to system integration, discuss why messaging wins for our use case, and bootstrap our Gleam project.

See you then! 🎄

This post is part of the Advent of Enterprise Integration Patterns series. Follow along with the enterprise-integration-patterns tag or subscribe to the RSS feed.